Call for Effectiveness Research on Competency-Based Education

CompetencyWorks Blog

Despite the increasing prominence of competency-based education (CBE), there is not yet enough evidence across diverse settings to make strong claims about what works, for whom, and under what conditions. An article recently published in the inaugural issue of the Competency-Based Education Research Journal, "A Research Agenda for Competency-Based Education" (DeBacker et al., 2024), lays out a research agenda for the field. This free, open-access article provides an overview of competency-based education and suggests topics for research. Educational researchers, and educators who conduct research, are encouraged to read it for inspiration. Together, we can build the body of evidence needed to initiate and sustain effective competency-based education.

The Components of the Research Agenda Are Phases of the Research Enterprise

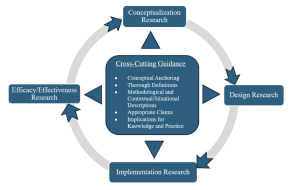

The overview of this research agenda was referenced in a previous blog article, Building a Shared Space of Innovative Research and Learning, and discussed at the second annual research roundtable at the Aurora Institute Symposium. The components of the research agenda (illustrated in the figure) represent a continuum of phases of the research enterprise that together build evidence to support competency-based education.

It is called an enterprise because a series of studies of each type (conceptualization, design, implementation, and efficacy and effectiveness research) is needed to build a body of evidence in support of competency-based education. No single study can cover all of these types of research, but a portfolio of studies representing each type is necessary for knowledge and application to grow.

The Need for Effectiveness Research

Efficacy research and effectiveness research share the same goal: providing evidence that a program meets its goals and produces positive outcomes. Efficacy research, however, is generally conducted under ideal circumstances (e.g., random assignment of participants to the study conditions). It is difficult to study educational interventions, such as a competency-based education program, under such ideal conditions, and these studies are rare.

Effectiveness research, by contrast, is done under real-world conditions, as the program actually operates (Singal et al., 2014). Because CBE programs vary widely and their numbers are growing, a great deal of effectiveness research is needed to examine the impact of each program, since each one is embedded in a specific context and has specific features.

While there is much work to be done to build evidence and knowledge for each type of research, the most pressing need is for efficacy and effectiveness research (DeBacker et al., 2024; Parsons & Mason, 2021). Because efficacy research is difficult to implement, however, we suggest that this call emphasize effectiveness research. Effectiveness research, which focuses on real, enacted programs, will resonate with policy makers, education leaders, and educators, who can see the results of their own programs. In today’s educational landscape, with its complex variety of curricular, pedagogical, and instructional approaches and its increasing resource constraints, decision makers need evidence that an approach will work before they decide whether to continue using and funding a program.

Will you contribute to CBE effectiveness research?

If you lead or are involved in a CBE program, consider conducting an effectiveness study, or partnering with researchers, to investigate whether and how the program affects student outcomes (e.g., more students achieving certification, increased employability) and/or program-related outcomes (e.g., higher credentialing rates, more diverse learners, higher enrollments). To attribute any impact on these outcomes to the CBE program, you need a strong research design.

We cannot attribute the outcomes to the CBE program when the research design allows factors other than the CBE program to affect them. Here are three examples of factors that can interfere with our ability to attribute outcomes to the CBE program, along with potential mitigation strategies:

- A majority of the learners are using a third-party tutoring service during the CBE program.

  - Challenge: Can you determine whether the CBE program OR the third-party tutoring service produced the certification of the competencies?

  - Mitigation: Ensure the research design separates learners who used third-party tutoring from those who did not in the analysis, to isolate the impact of the CBE program.

- Most learners entering the program have already mastered the program’s competencies.

  - Challenge: Can you determine whether the CBE program produced the high levels of mastery of the competencies?

  - Mitigation: The research design must assess learners’ mastery of the competencies before the CBE program and use this information in the analysis of the outcomes to determine whether the CBE program was responsible for achieving them.

- Many learners in the CBE program are concurrently being trained at their jobs on the same competencies.

  - Challenge: Can you determine whether the CBE program alone contributed to the learners’ achievement of the competencies?

  - Mitigation: The research design must identify learners who received on-the-job training and use that information in the analysis of the outcomes to determine whether the CBE program was solely responsible.
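To make the tutoring example concrete, here is a minimal Python sketch, assuming a comparison group (as in the strategies that follow) and a simple yes/no record of whether each learner used third-party tutoring. The records, their layout, and the function names are hypothetical, and the scores are invented. The sketch contrasts a naive CBE-versus-comparison difference with differences computed separately for tutored and non-tutored learners.

```python
from statistics import mean

# Hypothetical records: (group, used_tutoring, outcome_score).
# All values are invented for illustration; note that more CBE learners
# than comparison learners used tutoring.
records = [
    ("CBE", True, 84), ("CBE", True, 86), ("CBE", True, 88), ("CBE", False, 74),
    ("comparison", True, 82), ("comparison", False, 70),
    ("comparison", False, 71), ("comparison", False, 72),
]


def naive_difference(rows):
    """CBE mean minus comparison mean, ignoring tutoring entirely."""
    cbe = [score for group, _, score in rows if group == "CBE"]
    comp = [score for group, _, score in rows if group == "comparison"]
    return mean(cbe) - mean(comp)


def stratified_differences(rows):
    """CBE mean minus comparison mean, computed separately for tutored and
    non-tutored learners, so tutoring status is held constant in each contrast."""
    diffs = {}
    for tutored in (True, False):
        cbe = [s for g, t, s in rows if g == "CBE" and t == tutored]
        comp = [s for g, t, s in rows if g == "comparison" and t == tutored]
        if cbe and comp:  # a stratum needs learners from both groups
            diffs[tutored] = mean(cbe) - mean(comp)
    return diffs


print(naive_difference(records))        # → 9.25
print(stratified_differences(records))  # → {True: 4, False: 3}
```

In these made-up data the naive difference (9.25 points) is larger than either within-stratum difference (4 and 3 points), because tutored learners score higher and are concentrated in the CBE group. The same kind of split could be applied to prior mastery or on-the-job training from the other two examples.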

Here are a few specific steps you can take in your research study to strengthen claims about the extent to which the CBE program affected the outcomes. We can never be 100% confident in claims about a CBE program’s impact, because that would require a perfectly rigorous research design. However, these steps can strengthen your claims about the CBE program and move your study toward an effectiveness study.

These suggested strategies are typically additions to the research design and are described in detail in texts on research design (see Shadish, Cook, & Campbell, 2002).

- Add a comparison group. This involves selecting a program that does not use CBE and requesting its participation. At a minimum, have a thorough description of this program, which will serve as your comparison. Ideally, it should cover the same content or discipline as the CBE program.

- Add pre-tests. Pre-tests should be aligned (or linked) with the outcome measures and should measure the relevant content and skills at the start of the program for both the CBE program and the comparison program. The pre-tests and the outcome measures should not be the exact same instruments; alternate forms are a likely option. There are two reasons for this. First, the pre-tests must appropriately measure learners’ knowledge, skills, and abilities before the CBE program, rather than only the end-of-program level, because learners have not yet had the opportunity to learn the competencies. Second, if the same instrument is used at pre-test and again for the outcomes, performance on the outcomes may be influenced by having taken the same test earlier.

- Measure implementation and other participant experiences. This involves determining the extent to which each program was actually implemented and whether participants did other relevant things during their time in their respective programs. With this information, you can create an indicator of the level of implementation and include it in the analysis, and you gain insight into any confounding activities that may be affecting the outcomes.

- Collect historical information from participants. If possible, collect information about both groups of participants on factors that may be related to the outcomes. These data can include experiential indicators (e.g., academic and non-academic experiences) and performance indicators (e.g., certifications or academic grades). Comparing these data between the CBE and non-CBE groups shows how similar or different the groups are. If they differ, the data can be incorporated into the analysis of the outcomes to make fairer comparisons between the two groups.
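One common way to bring a comparison group, pre-tests, and background data together in the analysis is regression adjustment (equivalent to analysis of covariance, or ANCOVA): regress the outcome on a CBE indicator plus the pre-test score, so that the CBE coefficient compares learners who started at the same level. This is our illustration, not a method prescribed by the research agenda. The minimal sketch below uses only the Python standard library and runs on invented data in which CBE learners start with higher pre-test scores; the names `learners` and `ols` are hypothetical.

```python
from statistics import mean

# Hypothetical learners: (in_cbe, pretest, outcome). The values are invented
# and constructed so that outcome = 10 + 5 * in_cbe + 0.8 * pretest exactly,
# i.e., the true adjusted CBE difference is 5 points.
learners = [
    (1, 60, 63), (1, 70, 71), (1, 80, 79),  # CBE group (higher pre-tests)
    (0, 50, 50), (0, 55, 54), (0, 60, 58),  # comparison group
]


def ols(X, y):
    """Ordinary least squares: solve the normal equations (X'X) b = X'y
    by Gauss-Jordan elimination with partial pivoting."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [
        [sum(row[i] * row[j] for row in X) for j in range(k)]
        + [sum(row[i] * yi for row, yi in zip(X, y))]
        for i in range(k)
    ]
    for col in range(k):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        # Eliminate this column from every other row.
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    # A is now diagonal in its first k columns.
    return [A[i][k] / A[i][i] for i in range(k)]


# Design matrix columns: intercept, CBE indicator, pre-test score.
X = [[1.0, in_cbe, pre] for in_cbe, pre, _ in learners]
y = [post for _, _, post in learners]

# Unadjusted difference in mean outcomes between the two groups.
naive = mean(p for c, _, p in learners if c == 1) - mean(p for c, _, p in learners if c == 0)
intercept, cbe_effect, pretest_slope = ols(X, y)

print(naive)                 # → 17
print(round(cbe_effect, 3))  # → 5.0
```

Here the naive difference (17 points) mostly reflects the CBE group’s higher pre-test scores, while the adjusted coefficient recovers the 5-point difference built into the data. Background variables collected from participants could be appended as further columns of `X`, provided no column is a linear combination of the others. A real analysis would also need standard errors and checks of the model’s assumptions, which a statistics package would normally supply; this version only shows the mechanics.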

These four sets of activities can enhance the rigor of the research and begin moving it from a descriptive study toward an effectiveness study. However, designing and incorporating them into the study requires more time and effort.

One strategy for undertaking this rigorous research is to build collaborations between researchers and practitioners, and to increase discussions between policy makers and state-level agencies, in order to assemble research teams and build the capacity and support needed to design and implement the necessary effectiveness studies. This investment of effort will pay off by providing useful information about CBE programs to policy makers, education leaders, and educators.

Please take up this call for research by forming collaborations, undertaking the research with rigor, and then disseminating your research for all to learn. Best wishes for your effectiveness research journey!

References

DeBacker, D., Kingston, N., Mason, J., Parsons, K., Patelis, T., & Specht-Boardman, R. (2024). A research agenda for competency-based education. Competency-Based Education Research Journal, 1, 1-21.

Parsons, K., & Mason, J. (2021). Charting the course ahead: Towards a postsecondary competency-based education research agenda. In Career Ready Education through Experiential Learning. IGI Global. https://doi.org/10.4018/978-1-7998-1928-8.ch014

Shadish, W. R., Cook, T. D., & Campbell, D. T. (2002). Experimental and quasi-experimental designs for generalized causal inference. Houghton Mifflin.

Singal, A. G., Higgins, P. D. R., & Waljee, A. K. (2014). A primer on effectiveness and efficacy trials. Clinical and Translational Gastroenterology, 5, e45. https://doi.org/10.1038/ctg.2013.13

Learn More

- Co-Developing a Student Survey with Washington Stakeholders

- Building a Shared Space of Innovative Research and Learning

- Learner-Centered Ecosystems as a Path Forward for Public Education: A Convergence of Perspectives and Research

Thanos Patelis is currently a Lead Psychometrician at the Center for Certification and Competency-Based Education at the University of Kansas. He is a seasoned applied researcher, assessment development professional, psychometrician, convener of subject-matter experts, leader of technical teams, and life-long teacher with over 30 years of experience at regional and national institutions and organizations.
