Using Competency Performance Levels as Predictors of College Persistence in Competency-Based Schools

CompetencyWorks Blog

School boards, policymakers, parents, and community members continue to grapple with the best way to measure our schools’ success. We need ways to accurately assess how our students and schools are performing over time. But none of the metrics currently in place accurately reflect an individual student’s knowledge, skills, and mindsets — their ability to persevere through setbacks and failures, their ability to solve problems and think critically, their ability to manage tasks and deadlines, and their ability to set goals and seek support and resources to achieve them.

The 2014 New America Education Policy Brief, “The Case Against Exit Exams,” stated, “New evidence has reinforced the conclusion that exit exams disproportionately affect a subset of students, without producing positive outcomes for most,” and that they “have tended to add little value for most students but have imposed costs on already at-risk ones.” While most states have moved away from exit exams as a requirement for high school graduation, many states still use standardized testing to rank or grade school performance.

Standardized tests measure a student’s ability to take tests, sometimes within a set amount of time, but they do not necessarily measure that student’s knowledge, and they absolutely do not measure the student’s ability to apply that knowledge in the real world. Other available metrics (e.g., honors classes, AP classes, GPA) also fail to reflect a deeper sense of what our young people know and are able to do.

How do we truly measure the impact of the education our schools are providing and whether or not we are successfully preparing students for life after high school?

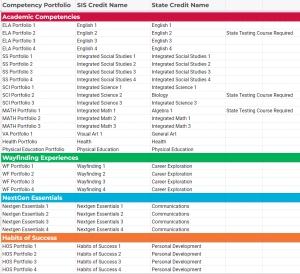

This question is one that Building 21 is trying to answer. We have been studying the data from our Building 21 Allentown competency-based education (CBE) high school. We opened this school in the 2015-2016 school year with just 9th graders and grew a grade every year until we had our first graduating class in June of 2019. Students graduate from our school by demonstrating mastery in their competency portfolios, not by taking traditional courses. Students are assessed on 33 competencies in 9 competency areas — English Language Arts, Social Studies, Science, Math, NextGen Essentials, Habits of Success, Visual Art, Health, and PE. Students also complete a set of Wayfinding experiences and are assessed on Math concept lists. The table below lists our competency portfolio requirements for graduation:

Students work on the same competencies and continua each year, across all learning experiences, striving to grow and to reach level 10 on our continua, which indicates college and career readiness. As we continue to expand our competency model in schools across the country, we want to analyze our competency-based approach to see if we truly are preparing our students for post-secondary success.

Our operating hypothesis when we started Building 21 was that our competency ratings, which are based on performance-based assessments requiring students to apply their knowledge in authentic ways, would be a better barometer of college and career readiness than a handful of standardized tests with the inherent limitations noted above. And now, with three cohorts of students having graduated, we finally have the data to test this hypothesis.

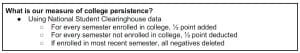

We evaluated the level of correlation between our students’ performance levels on our competencies and their post-secondary outcomes. We used data from the National Student Clearinghouse across our first three graduating classes to determine the students who went to college and how long they stayed in college. We developed a college persistence measure determined by the number of semesters a student was enrolled in a two- or four-year college or university after they graduated from high school.
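To make the persistence measure concrete, here is a minimal sketch of how a semester count could be derived from enrollment records. The record layout (a student ID paired with a term label), the function name `semesters_enrolled`, and the sample rows are all assumptions made for illustration; they are not the National Student Clearinghouse format or our actual data.

```python
from collections import defaultdict


def semesters_enrolled(records, student_ids):
    """Count distinct enrolled terms per student.

    records: iterable of (student_id, term) pairs (hypothetical layout).
    student_ids: every graduate to report on; graduates with no
    enrollment records get a persistence value of 0.
    """
    terms = defaultdict(set)
    for student_id, term in records:
        terms[student_id].add(term)  # a set ignores duplicate rows
    return {sid: len(terms[sid]) for sid in student_ids}


# Made-up example rows, not real student data.
records = [
    ("s1", "2019-fall"),
    ("s1", "2020-spring"),
    ("s1", "2020-fall"),
    ("s2", "2019-fall"),
    ("s1", "2020-fall"),  # duplicate row, counted once
]
persistence = semesters_enrolled(records, ["s1", "s2", "s3"])
print(persistence)
```

Counting distinct terms rather than rows keeps a student who appears twice for the same semester from being double counted, and passing the full list of graduates keeps students who never enrolled in the analysis with a value of zero.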

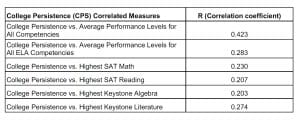

We then computed Pearson correlation coefficients (Pearson’s r) between college persistence and several measures: the average performance level across all competencies, competency performance levels by area, GPA, SAT scores, and Keystone Exams (Pennsylvania’s high school state tests for Literature, Algebra 1, and Biology). Our initial findings are encouraging.
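For readers who want to see what this calculation involves, the sketch below computes Pearson's r from its standard definition: the covariance of two measures divided by the product of their standard deviations. The function name `pearson_r` and the sample numbers are assumptions made for illustration; they are not our data and the printed value says nothing about our results.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of products of deviations (numerator) and sums of squares.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_x * var_y)


# Made-up values: average competency level and semesters enrolled
# for five hypothetical graduates.
avg_level = [6.0, 7.5, 8.0, 9.0, 10.0]
semesters = [0, 1, 2, 2, 4]
print(round(pearson_r(avg_level, semesters), 2))
```

The result always falls between -1 and 1. Values near 1 mean that students with higher values on one measure tend to have higher values on the other, and values near 0 mean there is little linear relationship.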

We found a moderate to strong positive correlation between the average performance levels on all of our competencies and college persistence. The correlation was lower when each individual competency area was correlated with college persistence separately (e.g., see ELA in the table below). The correlation with college persistence was also lower for SAT scores and the Keystone Exams. The table below shows the results of our analysis:

Our findings show that our average competency performance levels correlate with college persistence about twice as strongly as SAT scores and Keystone Exams do.

Our initial correlation data have not yet been validated by an external research partner; we are working on this now. However, the moderate to strong positive correlation between average performance levels on all of our competencies and college persistence, which is higher than the correlations we found for the SAT and state exams, leads us to believe that a strong performance (Level 10 or higher on our continua) on all of our competencies, both academic and nonacademic, is a better predictor of post-secondary success for our schools than other traditional measures.

CBE schools are under pressure to prove their models work. Even though we know that the traditional model of school is not working for many students and that we need to do something different, actually changing your model and trying something different invites a higher level of scrutiny. We are excited to continue our research and analysis of our competency model and the relationship between our competency performance levels and post-secondary success and hope to publish future findings.

In my next blog post, I will take a deeper dive into the why behind our continua, or competency-based learning progressions, and the power they hold for seamless K-12 instruction and assessment in CBE schools.

For those of you who read one of my previous blog posts, Building 21’s Studio Model: Designing Learning Experiences for Engagement and Impact, and are interested in studio design, Building 21’s Learning Innovation Network is running a virtual Studio Design Institute this summer from August 1st-4th. Here is a link to our website for more information and to register as a participant.

Sandra Moumoutjis is the Executive Director of Building 21’s Learning Innovation Network, which is designed to grow and support a community of schools and districts as they transition to competency-based education. Through professional development and coaching, Sandra supports schools and districts in all aspects of the change management process. Sandra is the co-designer of Building 21’s Competency Framework and instructional model. Prior to working for Building 21, Sandra was a teacher, K-12 reading specialist, literacy coach, and educational consultant in districts across the country.
